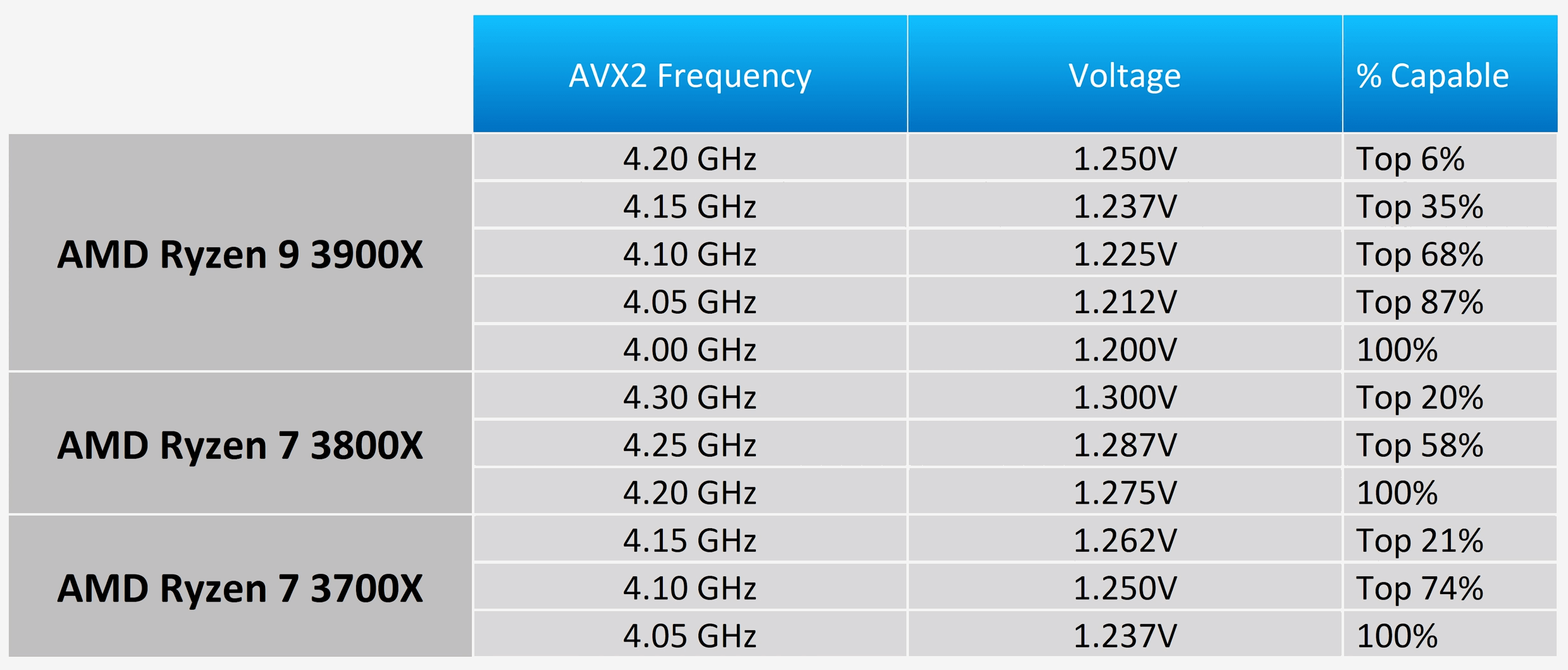

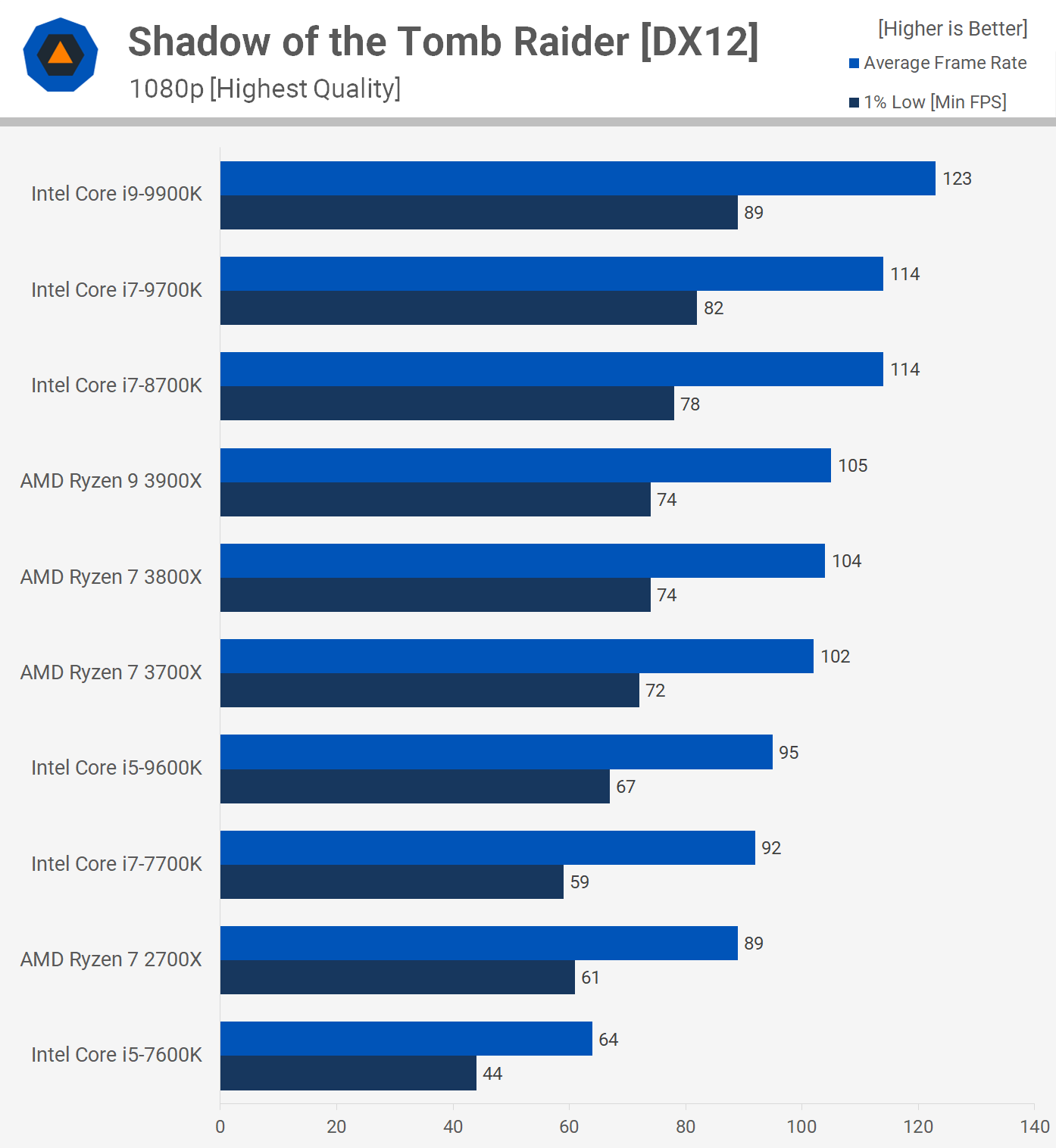

Silicon Lottery recently released some Ryzen 3000 binning data, and it suggests the better-quality silicon has been reserved for the 3800X. The top 20% of all 3800X processors tested passed their 4.3 GHz AVX2 stress test, whereas the top 21% of all 3700X processors were only stable at 4.15 GHz. Also, all 3800X processors passed the test at 4.2 GHz, while all 3700X processors were only good for 4.05 GHz, meaning the 3800X has about 150 MHz more headroom when it comes to overclocking.
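For anyone who wants to check the arithmetic, here is a minimal sketch that works only from the four frequencies quoted above. The dictionary layout and the function names (`gap_mhz`, `spread_percent`) are our own illustration and not part of Silicon Lottery's data or tooling.

```python
# Frequencies quoted above from Silicon Lottery's binning data, in MHz.
# "all" = every chip tested passed; "top" = the top 20% (3800X) or 21% (3700X).
BINS_MHZ = {
    "3800X": {"all": 4200, "top": 4300},
    "3700X": {"all": 4050, "top": 4150},
}


def gap_mhz(tier: str) -> int:
    """Frequency advantage of the 3800X over the 3700X at a given tier."""
    return BINS_MHZ["3800X"][tier] - BINS_MHZ["3700X"][tier]


def spread_percent() -> float:
    """Gap between the worst 3700X bin and the best 3800X bin, in percent."""
    worst = BINS_MHZ["3700X"]["all"]
    best = BINS_MHZ["3800X"]["top"]
    return (best / worst - 1) * 100


if __name__ == "__main__":
    for tier in ("all", "top"):
        print(f"{tier}: 3800X leads by {gap_mhz(tier)} MHz")
    print(f"worst 3700X to best 3800X: {spread_percent():.1f}%")
```

The gap works out the same at both tiers, which is why a single headroom figure describes the whole distribution reasonably well.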

Silicon Lottery AMD Ryzen 3000 Binning Results

In other words, the average 3800X should overclock better than the best 3700X processors, but we're still talking about a minor 6% frequency difference between the absolute worst 3700X and the absolute best 3800X, per their testing. For more casual overclockers like us, the difference will likely be even smaller. Our 3700X appears stable in our in-house stress test and to date hasn't crashed once at 4.3 GHz, which is the same frequency limit we hit with the retail 3800X we got. As for the TDP, that's confusing to say the least, but we'll go over some performance numbers first and then discuss what we think is going on.