
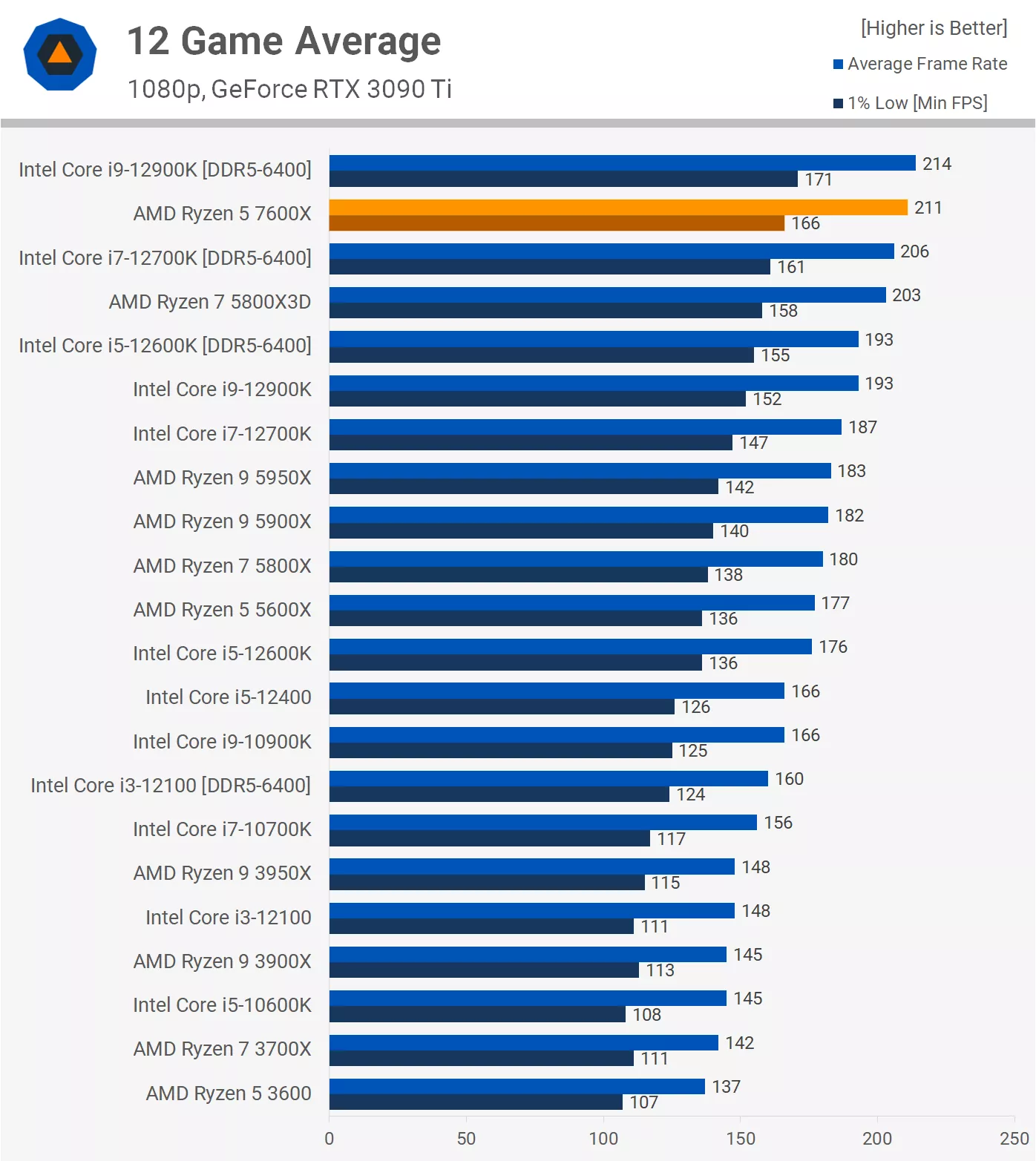

That's what everyone expected. Hell, that's what I was expecting for its matchup against Raptor Lake, not Alder Lake. And that's not just the 7600X. The 7950X is losing to the Alder Lake i7 in gaming. It's even losing with PBO Max and overclocked RAM.

Frankly, I'm worried that Intel's corrupt benchmark-fixing machine has gotten to Techpowerup. I was already worried about this with the release of the 5800X3D. Nobody else had the 5800X3D losing to the 12700K in roundups. I was able to write it off when that happened because it was only a few percent, and benchmark setups/suites vary. But this...this is beyond explanation. This is looking like a UserBenchmark moment for what has long been one of my favorite resources for tech review.

Head over to Anandtech:

https://www.anandtech.com/show/1758...ryzen-5-7600x-review-retaking-the-high-end/17

Techspot aka Hardware Unboxed:

https://www.techspot.com/review/2534-amd-ryzen-7600x/

Tom's Hardware:

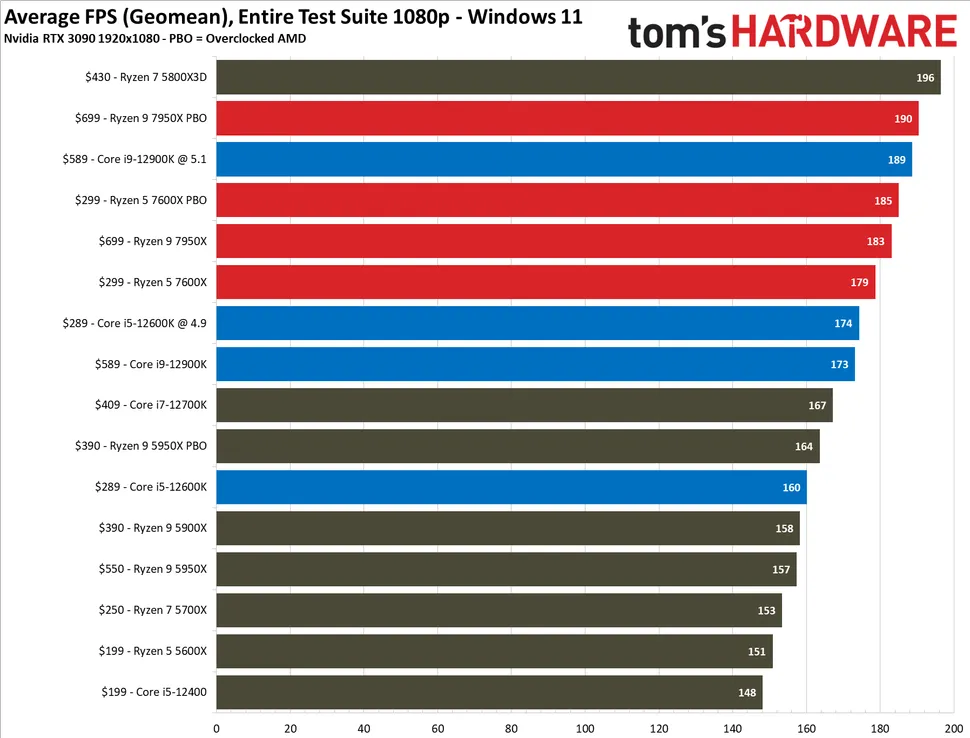

AMD Ryzen 9 7950X and Ryzen 5 7600X Review: A Return to Gaming Dominance

Tweaktown:

https://www.tweaktown.com/reviews/1...4-cpu/index.html#Gaming-and-Power-Consumption

Eurogamer aka Digital Foundry:

https://www.eurogamer.net/digitalfoundry-2022-amd-ryzen-9-7900x-ryzen-5-7600x-review?page=2

Gamers Nexus:

RIP Techpowerup.

So according to GN, it sounds like there's still not much real-world gaming difference between any of these CPUs. I wonder if that will change with the 4090 at 4K.
